Overview

In this post, I’ll be discussing how to plan storage performance capacity via IOPS.

Situation

Let’s say that, as an IT admin, you’re presented with a storage migration task, or with deploying additional iSCSI storage for growing application servers such as Microsoft Exchange Server or the VMware ESX hypervisor. The question is: how does an IT admin determine the performance needed to handle the newly requested duties? I was recently presented with this task when a divisional office outgrew the capabilities of its five-year-old storage system, and the staff were torn between the RS2211RP+ and the RS3411RPxs. Both offer more than enough capacity for their needs; the question was performance. After further analysis of their storage needs and the usage behavior of everyone in the office, it was determined that an RS2211RP+ is all that is required. In this post, I’ll discuss IOPS, which is one of two performance benchmarks in the storage industry.

What are IOPS?

Previously, a storage system’s performance capability was measured by its throughput, typically from a single computer. Throughput measures the average number of megabytes transferred within a given period for a specific file size. However, throughput alone isn’t accurate enough at the scale of a larger storage system: most of the time, requests to a storage server consist of many small reads and writes rather than a single computer making a single large file request. Measuring a storage system’s ability to handle numerous small data requests requires a different benchmark, which is IOPS.

IOPS (Input/Output Operations per Second) measures the number of operations per second that a storage system can perform. IOPS offers a different way of measuring activity, as it can be used to simulate multi-user behavior: the cumulative total of user access is essentially the number of IOPS being requested from the storage array at a given time.

How to determine how many IOPS you need

Here is how IT admins can determine the IOPS currently used by their applications, which is the practice most solution providers recommend. First, evaluate the server’s current performance usage over a period of thirty days, sampling at times such as the beginning of the business day, mid-day, closing hours, and when backups are occurring. From these samples, you can determine how many IOPS an application server is using. A couple of examples follow.
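
As a rough illustration of the sampling approach, here is a minimal Python sketch that summarizes IOPS samples per time window. The window names and every sample value are hypothetical placeholders, not measurements from the office described in this post, and sizing for the 95th percentile of the busiest window is an assumption of this sketch rather than a rule from any vendor.

```python
from statistics import mean, quantiles

# Hypothetical IOPS samples per time window (illustrative values only).
samples = {
    "start_of_day": [120, 135, 150, 142],
    "mid_day": [90, 95, 110, 100],
    "closing": [80, 85, 70, 75],
    "backup": [400, 420, 390, 410],
}


def summarize(values):
    """Return the average, peak, and 95th-percentile IOPS for one window."""
    # quantiles(..., n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = quantiles(values, n=100, method="inclusive")[94]
    return {"avg": mean(values), "peak": max(values), "p95": p95}


report = {window: summarize(values) for window, values in samples.items()}
for window, stats in report.items():
    print(f"{window:>12}: avg={stats['avg']:.1f} peak={stats['peak']} p95={stats['p95']:.1f}")

# Assumption: size for the busiest window, not for the overall average.
required_iops = max(stats["p95"] for stats in report.values())
print(f"Plan for roughly {required_iops:.0f} IOPS")
```

In practice the samples would come from whatever monitoring tool you already use, collected over the full thirty days.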

Microsoft Windows

View of my Windows workstation: an average of 18 IOPS @ 64K while running multiple office applications, multiple multi-tab browsers, and multiple multimedia applications concurrently (Ref: 1, 2, 3).

VMware ESXi

View of a production VMware server. Note in the image that the machine does not show a high amount of throughput (about 11.3 MB/s read, 1.6 MB/s write), but it does show a high number of IOPS being requested (Ref: 4).

Estimating IOPS performance needed

Here is information gathered from various websites that can help estimate the number of IOPS needed. While this information is useful for an estimate, it doesn’t replace an evaluation of the current performance utilization of an application server.

- Microsoft Exchange 2010 Server (Ref: 5)
  - Assuming 5000 users who each send/receive 500 emails a day, an estimated total of 3000 IOPS is needed
- Microsoft Exchange 2003 Server (Ref: 6)
  - Assuming 5000 users who each send 60 and receive 150 emails a day, an estimated total of 7500 IOPS is needed
- Microsoft SQL 2008 Server, cited by VMware (Ref: 7)
  - 3557 SQL TPS generates 29,000 IOPS
- Various Windows Servers (Ref: 8)
  - Community discussion: between 10 and 40 IOPS per server
- Oracle Database Server, cited by VMware (Ref: 9)
  - 100 Oracle TPS generates 1,200 IOPS
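
To turn the reference figures above into a first-pass estimate for a different workload size, one option is to scale them proportionally. The sketch below does that in Python. Linear scaling is my assumption here, not something the cited sources claim, and the workload keys and the `estimate_iops` helper are hypothetical names chosen for illustration.

```python
# Reference points taken from the list above: (units, IOPS).
# Units are users for Exchange and transactions per second (TPS) for databases.
REFERENCE_POINTS = {
    "exchange_2010_users": (5000, 3000),  # 500 emails per user per day
    "exchange_2003_users": (5000, 7500),  # 60 sent, 150 received per user per day
    "sql_2008_tps": (3557, 29000),
    "oracle_tps": (100, 1200),
}


def estimate_iops(workload, units):
    """Scale a reference figure linearly (an assumption) to a new workload size."""
    ref_units, ref_iops = REFERENCE_POINTS[workload]
    return units * ref_iops / ref_units


# Example: an 800-user Exchange 2010 deployment with a similar mail profile.
print(estimate_iops("exchange_2010_users", 800))  # 480.0
# Example: an Oracle workload running at 250 TPS.
print(estimate_iops("oracle_tps", 250))  # 3000.0
```

Treat the result as a starting point only; the measured usage of your own servers should take precedence.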

How to measure IOPS

Typically, IOPS are measured as 100% read or 100% write events, although this is seldom the case in the real world. Mixed performance can also be measured (Ref: 6), using a typical I/O size (or block size) from 4 KB up to 32 KB. The number of IOPS varies with the I/O size: typically, a smaller I/O size results in a greater number of IOPS, while a larger I/O size results in higher throughput but lower IOPS.
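
The trade-off between I/O size and IOPS follows from a simple relationship: throughput is the number of operations per second multiplied by the size of each operation. Here is a small Python sketch of that arithmetic. It assumes 1 KB = 1024 bytes, and the 100 MiB/s bandwidth ceiling is a hypothetical figure chosen only to show the trend; real arrays often hit other limits (such as drive seek time) before reaching a bandwidth ceiling at small block sizes.

```python
def throughput_mib_per_s(iops, block_kib):
    """Throughput implied by a given IOPS figure and block size."""
    return iops * block_kib / 1024


def iops_at_throughput(mib_per_s, block_kib):
    """Upper bound on IOPS if bandwidth were the only limit (an assumption)."""
    return mib_per_s * 1024 / block_kib


# The Windows workstation example from earlier: 18 IOPS at 64K.
print(throughput_mib_per_s(18, 64))  # 1.125

# Hypothetical 100 MiB/s ceiling: smaller blocks allow more IOPS.
for block_kib in (4, 8, 16, 32):
    print(f"{block_kib:>2} KiB -> {iops_at_throughput(100, block_kib):.0f} IOPS")
```

This is why an IOPS figure is only meaningful when the I/O size and the read/write mix are stated alongside it.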

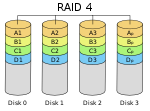

Space efficiency is given as an expression in terms of the number of drives, $n$; this expression designates a value between 0 and 1, representing the fraction of the sum of the drives' capacities that is available for use. For example, if three drives are arranged in RAID 3, this gives an array space efficiency of $1 - 1/n = 1 - 1/3 = 2/3$ (approximately 66%); thus, if each drive in this example has a capacity of 250 GB, then the array has a total capacity of 750 GB but the capacity that is usable for data storage is only 500 GB.

Failure rate is given as an expression in terms of the number of drives, $n$, and the drive failure rate, $r$ (which is assumed to be identical and independent for each drive). For example, if each of three drives has a failure rate of 5% over the next 3 years, and these drives are arranged in RAID 3, which survives the loss of any one drive, then this gives an array failure rate of $1 - (1 - r)^n - n r (1 - r)^{n-1} = 1 - 0.95^3 - 3(0.05)(0.95)^2 \approx 0.7\%$ over the next 3 years.
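
To check the RAID 3 figures in the example above, here is a short Python sketch. The function names are hypothetical, and the failure model assumes what the text states (identical, independent drive failure rates) plus the fact that a single-parity array is lost only when two or more drives fail.

```python
from math import comb


def space_efficiency_single_parity(n):
    """Usable fraction of raw capacity when one drive's worth holds parity."""
    return 1 - 1 / n


def array_failure_rate(n, r, tolerated=1):
    """Probability that more than `tolerated` of n independent drives fail."""
    survive = sum(
        comb(n, k) * r**k * (1 - r) ** (n - k) for k in range(tolerated + 1)
    )
    return 1 - survive


drives, drive_gb, drive_failure_rate = 3, 250, 0.05

efficiency = space_efficiency_single_parity(drives)
usable_gb = drives * drive_gb * efficiency
print(f"efficiency={efficiency:.2%}, usable={usable_gb:.0f} GB")

failure = array_failure_rate(drives, drive_failure_rate)
print(f"array failure rate over 3 years: {failure:.3%}")
```

With three drives at a 5% failure rate each, the array failure rate comes out well under 1%, far lower than the failure rate of any single drive.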